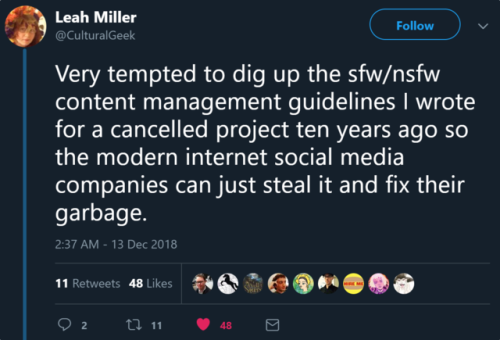

transphysics:maxofs2d: Great thread about online moderation. Source / link in the last tweet. Text:

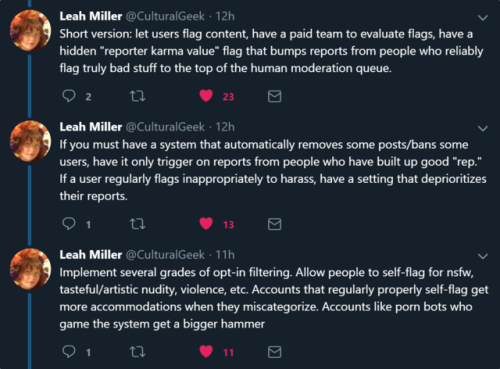

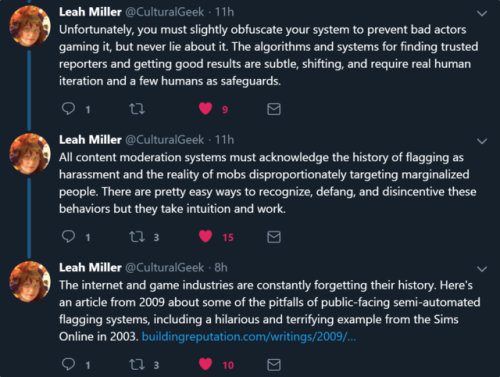

Very tempted to dig up the sfw/nsfw content management guidelines I wrote for a canceled project 10 years ago so the modern internet social media companies can just steal it and fix their garbage.

Short version: let users flag content, have a paid team to evaluate flags, have a hidden “reporter karma value” flag that bumps reports from people who reliably report truly bad stuff to the top of the human moderation queue.

If you must have a system that automatically removes some posts/bans some users, have it only trigger on reports from people who have built up good “rep”. If a user regularly flags inappropriately to harass, have a setting that deprioritizes their reports.

Implement several grades of opt-in filtering. Allow people to self-report for nsfw, tasteful/artistic nudity, violence, etc. Accounts that regularly correctly self-flag get more accommodations when they miscategorize. Accounts like porn bots which game the system get a bigger hammer.

Unfortunately, you must slightly obfuscate your system to prevent bad actors gaming it, but never lie about it. The algorithms for finding trusted reporters and getting good results are subtle, shifting, and require real human iteration and a few humans as safeguards.

All content moderation systems must acknowledge the history of flagging as harassment and the reality of mobs disproportionately targeting marginalized people. There are pretty easy ways to recognize, defang, and deincentivize these behaviors but they take intuition and work.

The internet and game industries are constantly forgetting their history. Here’s an article from 2009 about some of the pitfalls of public-facing semi-automated flagging systems, including a hilarious and terrifying example from Sims Online in 2003. -- source link
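The “short version” of the thread describes reporter karma only in prose. Below is a minimal Python sketch of one way it could work. It assumes karma is a smoothed share of a user's past flags that human moderators upheld. The class and function names, the scoring formula, and the thresholds (`AUTO_ACTION_MIN_KARMA`, `AUTO_ACTION_MIN_HISTORY`) are hypothetical illustrations, not the author's actual guidelines. Flags from trusted reporters rise to the top of the human review queue, habitual bad-faith flaggers sink to the bottom, and automatic action is allowed only for reporters with a long, accurate record.

```python
import heapq
import itertools
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical thresholds: the thread gives no numbers.
AUTO_ACTION_MIN_KARMA = 0.9    # minimum accuracy before automatic action
AUTO_ACTION_MIN_HISTORY = 20   # minimum number of human-reviewed flags


@dataclass
class Reporter:
    user_id: str
    upheld: int = 0    # past flags a human moderator agreed with
    rejected: int = 0  # past flags a human moderator dismissed

    @property
    def karma(self) -> float:
        # Smoothed accuracy: a brand-new reporter starts at 0.5, so a single
        # flag cannot make someone fully trusted or fully distrusted.
        return (self.upheld + 1) / (self.upheld + self.rejected + 2)

    def record_verdict(self, upheld: bool) -> None:
        # Called after a human moderator resolves one of this user's flags.
        if upheld:
            self.upheld += 1
        else:
            self.rejected += 1


def may_auto_act(reporter: Reporter) -> bool:
    # Automatic removal only triggers for reporters with good, proven "rep".
    history = reporter.upheld + reporter.rejected
    return (history >= AUTO_ACTION_MIN_HISTORY
            and reporter.karma >= AUTO_ACTION_MIN_KARMA)


class ReviewQueue:
    """Human moderation queue ordered by reporter karma, highest first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[float, int, str, str]] = []
        self._order = itertools.count()  # tie-breaker: earlier flags first

    def submit(self, reporter: Reporter, post_id: str) -> None:
        # heapq is a min-heap, so negate karma to pop the most trusted first.
        # Low-karma (e.g. harassing) reporters are deprioritized automatically.
        entry = (-reporter.karma, next(self._order), post_id, reporter.user_id)
        heapq.heappush(self._heap, entry)

    def next_for_review(self) -> Optional[Tuple[str, str]]:
        # Returns (post_id, reporter_id) for the highest-priority flag, if any.
        if not self._heap:
            return None
        _, _, post_id, user_id = heapq.heappop(self._heap)
        return post_id, user_id


if __name__ == "__main__":
    alice = Reporter("alice", upheld=40, rejected=2)    # reliable reporter
    mallory = Reporter("mallory", upheld=1, rejected=30)  # mass-flagger
    bob = Reporter("bob")                                # no history yet

    queue = ReviewQueue()
    queue.submit(mallory, "post-1")
    queue.submit(bob, "post-2")
    queue.submit(alice, "post-3")

    while (item := queue.next_for_review()) is not None:
        print(item)
    print("alice may auto-act:", may_auto_act(alice))
```

The smoothing and fixed thresholds are deliberate simplifications. As the thread itself stresses, a real system would need ongoing human iteration, some obfuscation of its rules, and human safeguards rather than a static formula.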

Tumblr Blog: tumblr.maxofs2d.net