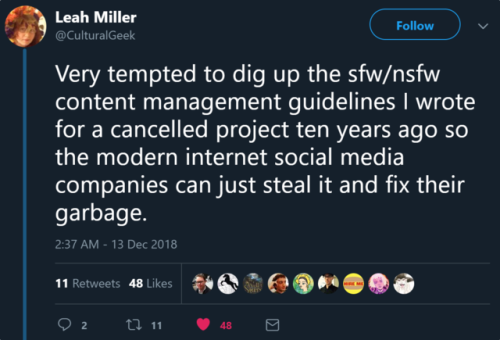

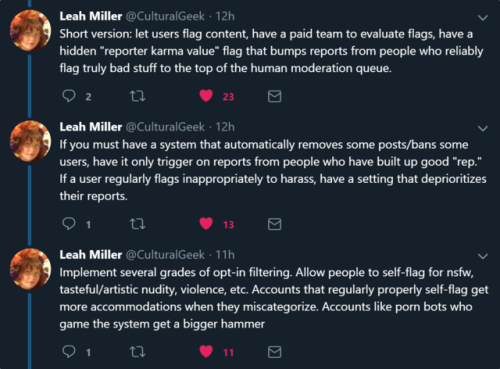

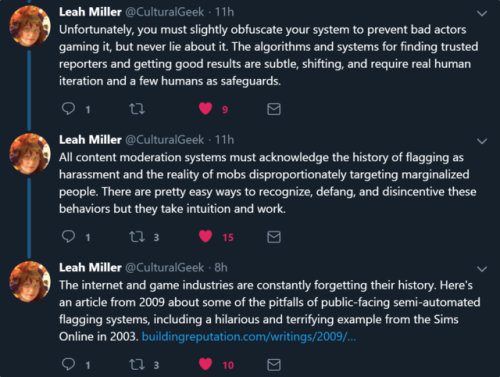

maxofs2d: Great thread about online moderation. Source / link in the last tweet.

“But we’ll feed it all into an ‘AI’ and automate the WHOLE THING! BRILLIANCE!”

I mean, just ask Microsoft about what happens when you get it wrong.

“But we’ll feed it a proper corpus for it to learn from!”

Yeah, that’s code for “We’ll license the GPT-3 corpus and use that,” which has the same problem that GPT-2 has, because its training data came from 12 years’ worth of internet crawls, and who the hell has the time or resources to actually vet or examine that much data for problems? “Modern” attempts at AI suffer from the GIGO problem: GARBAGE IN, GARBAGE OUT.

Anything that’s “powered by AI” is going to have some serious (and unfixable) bias problems, because no one thinks to vet the data they are feeding into what is essentially a black box, with zero transparency on how it arrived at its conclusions and no way to correct the machine when it reaches incorrect ones. (We’ll not go into intentional meddling by the company that made the system.)

It’s a problem I’ve seen with EVERY system whose marketing proudly states, “We use state-of-the-art AI to automate things!” There’s no way to tell the algorithm it fucked up, no way to figure out how it got to that fucked-up conclusion, and no way to correct it.

Considering the debacle this hellsite had with “female presenting nipples” a few years ago, you’ll forgive me if I’m instantly suspicious of any sort of automated content-flagging system. (See also the current slow-motion train wreck that is YouTube’s Content ID system: the fraud and abuse it is shot through with, and how impossible it is to have any sort of meaningful interaction with it.)

-- source link